API Connector Documentation

Import Google Search Console Data to Sheets

In this guide, we’ll walk through how to pull Google Search Console data data directly into Google Sheets, using the API Connector add-on for Sheets.

Contents

- Before You Begin

- Part 1: Connect to the Google Search Console API

- Part 2: Pull Data from Google Search Console to Sheets

- Part 3: Create a Custom API Request

- Part 4: Handle Pagination

- Part 5: API Documentation

Before You Begin

Click here to install the API Connector add-on from the Google Marketplace.

Part 1: Connect to the Google Search Console API

The easiest way to get started with the Google Search Console API is through API Connector’s built-in integration.

- In Sheets, open API Connector and create a new request (Extensions > API Connector > Open > Create request)

- Select Google Search Console from the drop-down list of applications

- Under Authorization, click Connect to Google Search Console

- You will be directed to google.com and asked to allow API Connector to view your Google Search Console data. Click Allow.

- You'll then be returned to your Google Sheet, and can verify that your Google Search Console connection is active.

Part 2: Pull Data from Google Search Console to Sheets

Now that we’re connected, let’s pull some data into Sheets.

- Under Endpoint, choose the"Get search analytics" endpoint.

- Under siteUrl, click the refresh icon and select an available site.

- Now fill in a date range (required), and any optional parameters. Here I've selected

queryto retrieve a list of keywords for my site. - Set a destination sheet, name your request, and hit Run.

Part 3: Create a Custom API Request

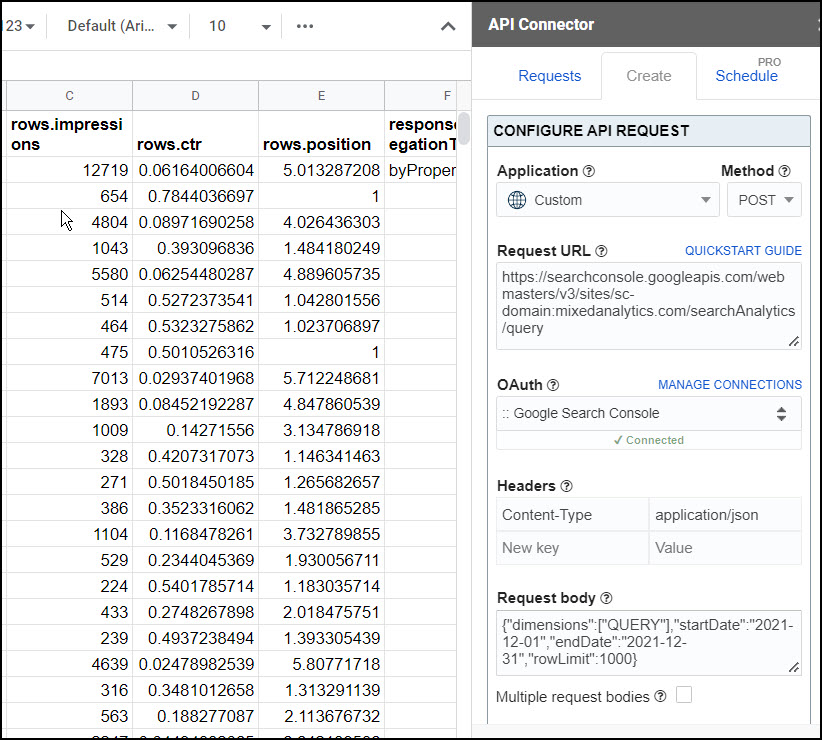

Alternatively, you can run a custom request instead of using API Connector’s built-in integration, using any of the parameters shown in Google Search Console's API documentation.

Here is an example setup. If you're using a http property, fill in the siteUrl using the syntax https%3A%2F%2Fmixedanalytics.com (make sure to encode it first). If you're using a domain property, enter it like sc-domain:mixedanalytics.com instead.

- Application:

Custom - Request method:

POST - Request URL:

https://searchconsole.googleapis.com/webmasters/v3/sites/siteUrl/searchAnalytics/query - OAuth:

Google Search Console - Headers:

Content-Type:application/json

- Request body:

{"dimensions":["QUERY"],"startDate":"2021-12-01","endDate":"2021-12-31","rowLimit":1000}

Part 4: Handle Pagination

By default, Google Search Console will send 1,000 records unless you use the rowLimit and startRow parameters as described in their documentation.

In API Connector you can loop through these pages automatically with the following settings:

- Pagination type: offset-limit body

- Offset body parameter: startRow

- Limit body parameter: rowLimit

- Limit value: 25000

- Run until: choose when to stop fetching data

Part 5: API Documentation

Official API documentation: https://developers.google.com/webmaster-tools/v1/api_reference_index

Hi Ani,

It seems the connector is not working with Domain level authorization in Google Search Console.

Can you confirm, to know if we are wrong 🙂

I just tested and it worked on my domain property. You'll need to change "https://" to "sc-domain".

Is it possible to have relative start and end dates based on the current date?

Sure, you can make a Sheets formula like

=today()-1, and then use that cell in your requests.Hi there, just purchased and this looks great!

With my first test I noticed that only the top 1000 keywords / rows popped into Sheets. Is it possible to get the complete data set and not just the first 1000 rows? (still learning)

Thanks for your time.

Figured it out by checking the Google resource page listed and testing some different options... however a new wrinkle has popped up.

We work with some large websites that often go well beyond 25,000 rows of data in a given day / month. Some sites have over 100,000 pages.

Can you help me to understand how to run the following:

All Queries (by Page) with a start and end date... but pulling ALL rows ... even if it goes beyond the 25,000 row limit.

I am hoping a multi query type call is the answer.. but again.. I am a beginner and still learning the ropes 🙂

Thank you for your time and patience. This is super cool.

That's awesome you figured it out, I just edited the article to make it more clear, too.

Google limits each batch to 25,000 records, so to get more you need to pull multiple batches. Each batch has a start and end point defined by the "startRow" and "rowLimit" parameters. API Connector doesn't currently have a preset pagination option for this, so we'll use the "Multiple request bodies" function to achieve something similar. You'd set it up by pasting the following into the Request body input box:

{"startDate":"2021-01-01","endDate":"2021-10-31","dimensions":["query"],"startRow":0,"rowLimit":25000}:::BREAK:::

{"startDate":"2021-01-01","endDate":"2021-10-31","dimensions":["query"],"startRow":25000,"rowLimit":25000}

:::BREAK:::

{"startDate":"2021-01-01","endDate":"2021-10-31","dimensions":["query"],"startRow":50000,"rowLimit":25000}

(and so on...)

By doing this you can theoretically get all rows, but as you can see you have to manually add in the request bodies, for now there's no "get all" option. I also think you might have trouble because Sheets slows down once you start pulling in so many records, I'm not sure it can really handle multiple sets of 100,000+ records. But hopefully this helps you test what's possible. Just shoot me a message here or over at support and I'll be happy to help further.

Update: You can now do this automatically with our offset-limit body pagination option.

This worked great as a starting point! For very large sites I can pull a shorter time period. I tried 100k by Page, Query and it seemed to work ok.

Thanks again.

We've added an option to cycle through automatically using offset-limit body pagination handling, I've updated the article to show how it works.

Great extension!

I want to build a sheet that looks up how much Discover-traffic, Search-traffic and News-traffic a URL has gotten. I want to be able to paste a list of URL:s into the sheet and get the data for each URL.

I think I'm half way there, but I don't know how to tell the API to look for the URL in a specific cell of my sheet?

I guess I need to replace "expression":"jul" in the code below...

{"startDate":"2021-12-01","endDate":"2021-12-31","dimensions":["page"],"type":"discover","dimensionFilterGroups":[{"filters":[{"dimension":"page","operator":"contains","expression":"jul"}]}],"rowLimit":3000}Hey there, please see this article, it shows how to use cell values in your request.

I read through you post, but don't understand how to referens a url or ist of url:s in the sheet. Tried this, but it didn't work:

{"startDate":"2021-12-01","endDate":"2021-12-31","dimensions":["page"],"type":"discover","dimensionFilterGroups":[{"filters":[{"dimension":"page","operator":"contains","expression":"+++QuerySheet!A2+++"}]}],"rowLimit":3000}What am I doing wrong?

That looks fine, are you referencing the correct location? (cell A2 in a tab called QuerySheet)

Feel free to contact support if you'd like me to take a look at your sheet.

Yay! It worked. Thank you!'

Hi,

I would like to be able to import Google search results for Shared google sheets or shared google stock sheets . for example when I use url https://www.google.com/search?q=docs.google.com/stock+spreadsheet/edit+site:docs.google.com&lr&safe=active&as_qdr=all&start=0&ved=2ahUKEwjG67S_x6n1AhUhUGwGHSLbCl04ZBDy0wN6BAgBEDY&dpr=1.25, it displays About 3,520 results (1.26 seconds) . How do I use this connector to import the results into google sheets.

Google Search Console is for getting search performance statistics for your own website. If you're looking for Google search results for a keyword you should check out the Google Custom Search API instead. This article provides a tutorial on how to access it.

Hey! I've tried the multiple request bodies as outlined in the conversation with Daryl above but I continue to be limited to 5000 records in return. Has Search console changed their record limits?

You should be able to get 25,000 records in one go. Can you add in a row limit of say 6000 to your request? If you still only get 5000 records then, it may be that your data doesn't contain more records than that.

Thank you so much for this! This is so cool and fun to play around with. I have a question around aggregating queries per page, but limiting the number of rows returned per page based on click count..

I figured out the aggregation per page part (thanks to your guide!) but I want to only return rows with clicks > or = 1 (so no queries with 0 clicks come in, even if they get impressions). Is there a way to do that?

Here's what I've got so far:

{"startDate":"2021-12-01","endDate":"2022-02-28","dimensions":["query","page"],"dimensionFilterGroups":[{"filters":[{"dimension":"page","operator":"contains","expression":"/path/page-example/"}]}],"rowLimit":3000}Where can I put the click value > 0 (if it's possible)? Thanks for this amazing guide!

Thank you, I'm glad you are having fun! 🙂 And that's such a good question. I thought there must be a way to do this since you can add a > 0 filter in the interface, but I just checked and as far as I can tell, the API only allows filtering on dimensions (query, page, device, or country). That's too bad, but you still have some options:

1) simply filter out those "0" rows with a Google Sheets filter.

2) use a JMESPath filter. Just copy/paste this expression into the JMESPath field to include only those records where clicks are greater than 0:

rows[?clicks > '0']Awesome, thanks so much for the quick response!

I have hundreds of domains in my GSC account that I need to delete. Doing it manually is PAINFUL. What is the best way to do this with API Connector from Mixed Analytics?

Hmm unfortunately our preset connection only enables the /auth/webmasters.readonly scope. You would need to create a custom OAuth connection that enables the /auth/webmasters scope, i.e. write access. From there you could cycle through a list of websites and delete them all with a request like

DELETEhttps://www.googleapis.com/webmasters/v3/sites/+++siteUrls!A1:A300+++Hi Ana :),

Thank you so much for this addon.

Sorry for the question..

Is there a way to search GSC for indexation status of a url?

e.g.

mysite.com/firstpage.html

can I search GSC for several URLs to see if they are indexed?

Should I be using https://developers.google.com/webmaster-tools/v1/api_reference_index#Inspection_tools

Not sure how to form this within google sheets 🙁

Thank you so much 🙂

Sure, I believe you can set it up like this:

Method:

POSTURL:

https://content-searchconsole.googleapis.com/v1/urlInspection/index:inspect?alt=jsonOAuth:

Google Search ConsoleHeaders:

Accept:application/jsonRequest body:

{"inspectionUrl":"https://mixedanalytics.com/api-connector/","siteUrl":"sc-domain:mixedanalytics.com"}(the siteURL will be

http://www.example.com/for a URL-prefix property, orsc-domain:example.comfor a Domain property.)If you'd like to reference multiple URLs from your sheet, you can run a multi-query request with multiple request bodies.

Hi,

Is it possible to filter out specific queries like brand name variations?

Sure, to do that you'll need to create a custom request so you can add in filters to the request body. Here's an example:

{"dimensions":["QUERY"],"startDate":"2022-01-01","endDate":"2022-02-28","rowLimit":1000,"dimensionFilterGroups":[{"filters":[{"dimension":"QUERY","expression":"brand1|brand2","operator":"EXCLUDING_REGEX"}]}]}This filter uses regex to exclude whatever you substitute in where it says

brand1andbrand2. (If you prefer to use an operator other than a regex exclude, the other available options are EQUALS, NOT_EQUALS, CONTAINS, NOT_CONTAINS, INCLUDING_REGEX).Update: We've added the

dimensionFilterGroupsparameter into the preset integration so this no longer requires a custom request.Can I use the Google Search Console API to query mobile usability test results for multiple URLs?

Yes, but the Mobile Friendly API seems to require an API key rather than OAuth (info). Get your key as described here, then plug it into a request like this:

POSThttps://searchconsole.googleapis.com/v1/urlTestingTools/mobileFriendlyTest:run?key=yourAPIkeyContent-Type:application/json{"url":"https://domain1.com"}:::BREAK:::{"url":"https://domain2.com"}:::BREAK:::{"url":"https://domain3.com"}Substitute in your own URLs where you see domain1, domain2, etc.

Hi, is there a month dimension or a way to aggregate data by month ? Thank you !

Hi Arnaud, unfortunately Google doesn't provide a month dimension for this API so you have 2 options: 1) include the

datedimension in your request, such that you retrieve metrics for each date. Then use a Sheets function or pivot table to aggregate the data by month yourself, or 2) set yourstartDateandendDateparameters to the start/end of the month, and run the query WITHOUT including thedatedimension. That will give you total metrics for that month, and you can then change the dates and re-run the query to fetch the next month of data, and so on.Hi there! What permission level do you need to access the data? I currently a full user on our site and the tool says I don't have the right permission.

If you have access via the Search Console interface (at any level -- full, restricted, or owner), then you should have access via the API. What is the exact error message you see? I would make sure that you're authenticated to the right account, and also that you're using the right syntax (i.e. "sc-domain:" for a domain property, "https" otherwise).

Thanks for the reply!

Here is the error:

1): Completed with errors- We received an error from googleapis.com (403) show response

{ "error": { "code": 403, "message": "User does not have sufficient permission for site 'sc-domain:redacted.com'. See also: https://support.google.com/webmasters/answer/2451999.", "errors": [ { "message": "User does not have sufficient permission for site 'sc-domain:redacted.com'. See also: https://support.google.com/webmasters/answer/2451999.", "domain": "global", "reason": "forbidden" } ] } }

Hi Allie, thank you for sending on. Can you please try the following?

1) disconnect and reconnect, making sure you're authenticating with the email address you use with Search Console. This is to make sure you're logging in with the right email address, in case you have a few.

2) using our preset integration, select and run a request to the

/sitesendpoint. This endpoint doesn't require any parameters so avoids any potential syntax errors, and also will return the exact URLs that you do have access to.Let me know if that works or you still have trouble connecting after that.

Thanks for the reply!

I’m using the right email and the right website to pull the data but it still says I don’t have the right permission

You received that error even from the

/sitesendpoint? In that case, I'm not sure what the issue could be... I tested on my side with some different email addresses and it always worked. If you search Google for "User does not have sufficient permission for site", you can find many people with the same issue, but as far as I can tell the solutions presented are also just to make sure you have access to the website and have entered it using the right syntax. Please feel free to contact support with a screenshot of your setup, I can take a look and see if anything jumps out.Hi,

but how get only sum of clicks & impressions for each website on my Google Search Account? It is possible?

The preset Search Console integration currently only allows fetching data for one website at a time. However if you set up a custom request, you can list out multiple URLs, one for each site, such that API Connector cycles through that list and runs a request for each one (this is called a multi-query request).

Hi, thanks for your work.

Starting from a list of URL, is it possibile to have clicks of relative start and end dates based on the current date?

Hey Antonio, if I understood correctly, you're asking how to use dynamic dates. You can do this by creating relative date cells in your sheet, e.g. create a tab called Dates and enter the function

=text(today()-7,"yyyy-mm-dd")into cell A1.Then in the startDate parameter, you can reference that cell by entering

+++Dates!A1+++. Every time the request runs, the start date will always be today - 7 (or whatever value you use in your function). Please let me know if that answers your question!Hi Ana, thanks for your reply, it answers in part to my question. I also wanted to know, how can i obtain clicks and impressions from GSC API starting from a list of URL.

Basically I want to check performance of a list of URL before and after the refresh date of the contents. Thanks again

You can use a filter if they all match a certain pattern, or you can set the

dimensionsparameter to "page". That will list all the pages (URLs) on your site along with clicks & impressions. You can then filter that list in Sheets or use VLOOKUP to pull in just the URLs you're interested in.Google allows for comparing one month versus another month or a period against a period. Is there a way to pull the data comparing two months? I know this is possible to do with the GSC API as other connectors are able to retrieve. It looks like there is just not an option in the UI to pass the comparison query to the API.

I don't see any way to do this via the API but you can check for yourself here: https://developers.google.com/webmaster-tools/v1/searchanalytics/query. That page lists all available API parameters. I see

startDateandendDatebut no parameters for selecting comparison periods. I can't speak for other connectors, but from what I've seen most of them aren't direct pipelines from the API, and instead pass/transform data through their own servers first, so they could be making 2 API calls and mapping them together in their own database before serving up the data. That's what I'd suggest here as well, though you'd need to do it using Sheets as your "database": make 2 separate API requests and group the results together into a summary table. You can make sure those 2 requests always use the same relative comparison period by referencing dynamic date cells in your sheet.Hi,

We're trying to export a list of all Not found (404) from Google Search Console. We've completed setup and connected to our GSC account.

Using the GSC, we can only export 1,000 URLs at once, we have 9,019!!

We can't see how to export all URLs using the tool. We've a good go, but can't seem to get it to work.

Any help would be very much appreciated.

Many thanks

Hey Rob, the Search Console API only provides 404 information using the URL Inspection method. Unfortunately this isn't very useful since I'm pretty sure you're looking to simply get a list of your 404 pages in a single call, but this method requires that you feed in each individual URL, run an API request for each one, and then check the returned PageFetchState value.

I suggest using Google Analytics (or a similar web analytics tool) instead, as usually the affected page title will contain "404" and you can then match those page titles up with their associated URLs. (If you don't already have that configured, you can check this article for information: https://www.analyticsmania.com/post/track-404-errors-with-google-analytics-google-tag-manager)

I have connected to Search Console but when I run it, it keeps saying permission, denided, ACCESS_TOKEN_SCOPE_INSUFFICIENT" etc. Can you please help? Search Console is connected!

Hi Franco! Can you please disconnect and reconnect Google Search Console, making sure to accept all requested permissions?

Please check here for more information: https://mixedanalytics.com/knowledge-base/api-connector-error-messages/#we-received-googleapis

Hi Ana!

I am not sure why, but the Connect to Google Search Console button is unclickable on the account we purchased the premium for. It is clickable on my other Google account. May I ask why please? the account email is UK.marketing@******group.com

Confirm that this email does have Full access to 2 GSC accounts.

Hi there, your account purchased the Pro plan which doesn’t enable OAuth connections like GSC. You can change to the Starter plan, which does, for no extra charge via your account portal (click Account > Manage Billing from the footer or main menu of API Connector).

Hey,

I started using the tool, but I need to pull in specific data, which I was hoping you could help me with.

I want to pull in the following data per URL:

- Number of rows with clicks

-Number of rows with 0 clicks

-Number of rows with 1k+ clicks

-Number of rows with 2k+ clicks

-Number of internal links to a page

-Position of the page

-CTR of the page

-Impressions per page

-Total Rows with clicks for that URL

Hi Bilal,

Go ahead and select the `searchAnalytics/query` endpoint using the built-in Google Search Console query builder in the Mixed Analytics side bar, add your siteUrl and a startDate and endDate, and select `page` as your dimension.

Some of the metrics you listed above will be available directly in your response. You will then want to set up separate calculations in Google Sheets (e.g. for number of rows with clicks, 0 clicks, 1k+ clicks etc). For example countif(B:B,">1000"), countif(B:B,"=0"), if column B contains the rows.clicks output.

Please note that "# of internal links" is not available in the GSC API: https://developers.google.com/webmaster-tools/v1/searchanalytics/query

Hi guys, great toll. Am quite new to API and using it. Currently our plan is export all the 16 months worth of data via API. We plan to do this in chunks. However, if/ when I request data from GSC API lets say for 7 days, is there way to double-check (failsafe), if we exported all the data from the API? Like a check of the amount of rows there exists in total between that time-range?

There isn't a way to check the expected number of rows via the API itself, but you might be able to manually check based on data or reports available in the Google Search console. Assuming you are using the searchAnalytics/query endpoint, make sure you are using pagination as described in the article above, with "run until" set to "response field empty" and "response field path" set to "rows".

Hello! I'm looking for ways to extract top keywords per URL - is there a way to do it using this connector?

Yes! The searchAnalytics/query endpoint will automatically return the top keywords. You can set rowLimit to specify how many results you would like.

How can I pull data so that each query + URL combination is on its own row?

Hi Zack,

To pull data so that each query and URL combination is on its own unique row, you need to group your request by both dimensions simultaneously.

In your API Connector request body, you should include both QUERY and PAGE within the dimensions array. The grouping of these dimension values creates a unique key for each result row, ensuring you see the specific landing page associated with each search term.

Your request body should look like this:

{

"startDate": "YYYY-MM-DD",

"endDate": "YYYY-MM-DD",

"dimensions": ["QUERY", "PAGE"]

}